Swiss Year of Scientometrics Lecture: Opportunities and Challenges of Scientometrics–Part IV

This blog post is the last of a four-part series based on the keynote presentation by Stefanie Haustein at the Swiss Year of Scientometrics lecture and workshop series at ETH Zurich on June 7, 2023.

Responsible use of metrics

As a last topic, I want to address a very important area that I think the scientometric community has ignored for a very long time: the use of the metrics that it creates and computes.

Research assessment reform

We all know that we live in a “publish or perish” culture, much of which has been created by quantitative measurements of scientific productivity and impact.

Metrics such as the journal impact factor (JIF) and the h-index are used to make critical decisions about individuals, including review, promotion, and tenure (RPT), funding, and academic awards. The importance of quantitative metrics has changed scholarly communication and publishing and created a range of adverse effects, such as plagiarism, “salami publishing,” authorship for sale, and citation cartels.

These adverse effects can be described by Goodhart’s and Campbell’s laws. By setting a metric as a target and by linking that target to a reward structure, we create an incentive for that metric to be gamed.

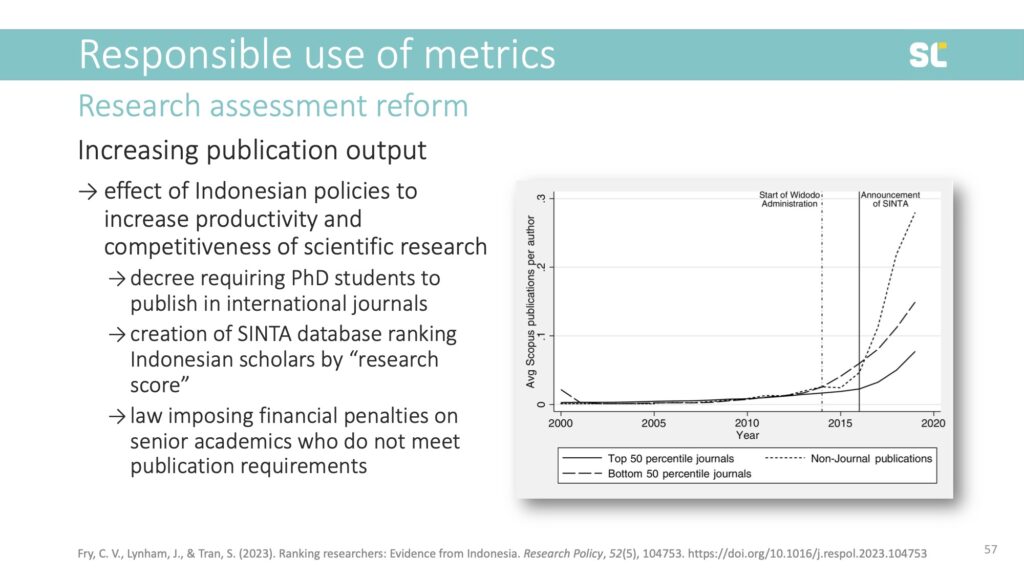

There are some examples of quantitative metrics that have actually reached desired goals of improving research productivity. A recent example comes from Indonesia, where policies instated by the Widodo administration increased the number of publications in Scopus-indexed journals.

The policies included a decree that requires PhD students to publish one paper in an international journal, the creation of the SINTA database indexing Indonesian publications and ranking scholars by a “research score,” and even a law imposing financial penalties on senior academics who do not meet a certain publication output. Below, you clearly see the increase in publication output after the policy change.

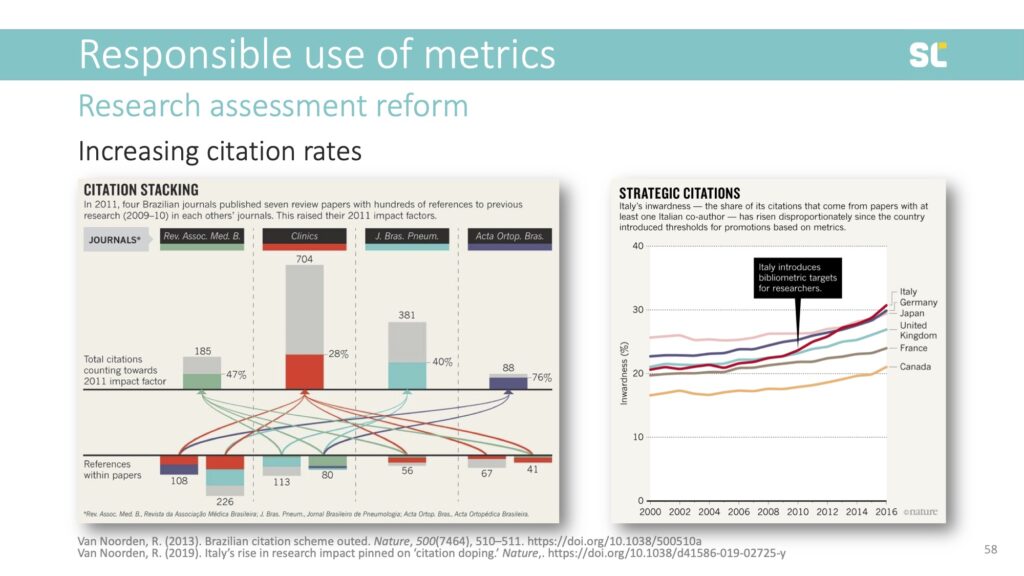

Unfortunately, we frequently see issues where researchers try to game metrics and metrics cause so-called adverse effects. In the slide below, you see an example of “citation stacking” (left image)—where journals try to increase their impact factors by citing each other’s papers published in the two previous years. Citation databases now monitor stacking and suspend journals. Another example is “citation doping” (right image), where you could see a significant rise in self-citations after Italy introduced bibliometric targets for researchers in 2010.
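To see why citation stacking targets papers from the two previous years, it helps to recall how a two-year impact factor is calculated: citations received in a given year to items published in the two preceding years, divided by the number of citable items published in those two years. The following is a minimal sketch of that ratio only. The function name and all numbers are made up for illustration, and the real JIF involves further rules (for example, about what counts as a citable item) that are not modeled here.

```python
def two_year_impact_factor(citations_to_prev_two_years, citable_items_prev_two_years):
    """Simplified two-year impact factor.

    citations_to_prev_two_years: citations received this year to items
        the journal published in the two preceding years.
    citable_items_prev_two_years: number of citable items the journal
        published in those two years.
    """
    if citable_items_prev_two_years <= 0:
        raise ValueError("journal must have at least one citable item")
    return citations_to_prev_two_years / citable_items_prev_two_years


# Hypothetical journal: 100 citable items, 200 citations within the window.
baseline = two_year_impact_factor(200, 100)

# The same journal after a partner journal adds 50 citations, all aimed
# at papers inside the two-year window (illustrative numbers only).
stacked = two_year_impact_factor(200 + 50, 100)

print(baseline, stacked)
```

Because only citations to the two-year window enter the numerator, a relatively small number of well-targeted citations can shift the ratio noticeably, which is what makes the window an attractive target.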

Since the current form of research assessment is harming research and researchers in all disciplines, there are some initiatives that are trying to reform it:

- The San Francisco Declaration on Research Assessment (DORA) is now turning 10 years old

- The Leiden Manifesto for Research Metrics was launched in 2015 by the scientometric community

- The Coalition for Advancing Research Assessment (CoARA) was formed in 2022

For example, CoARA’s core commitments include using quantitative indicators only as a supplement to support peer review, abandoning inappropriate use of metrics (particularly the JIF and h-index), and avoiding the use of university rankings.

CoARA also prominently stresses the importance of evaluating practices, criteria, and tools of research assessment using solid evidence and state-of-the-art research on research, and making data openly available for evidence gathering and research.

These are all steps in the right direction, but behavior change can take a long time, particularly in academia.

Metrics Literacies

Lastly, I want to talk about how we can educate researchers and research administrators about responsible use of scientometric indicators.

I want to introduce what we call “Metrics Literacies,” an integrated set of competencies, dispositions, and knowledge that empower individuals to recognize, interpret, critically assess, and effectively and ethically use scholarly metrics.

While Metrics Literacies are essential, there is a lack of effective and efficient educational resources. We argue that we need to educate researchers and research administrators about scholarly metrics.

In a context where every researcher is already overwhelmed with keeping up with the literature in their own field, videos are more efficient and effective than text. Storytelling and personas can help to draw attention and increase retention.

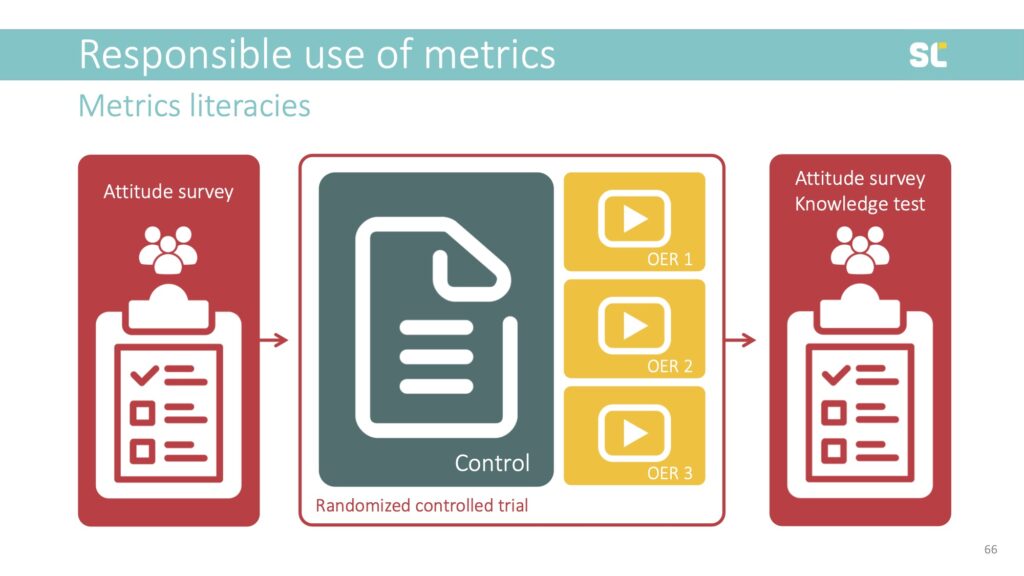

So we are currently planning a randomized controlled trial to test the effectiveness of different types of educational videos, using the h-index as an example.

Before producing our own educational videos about the h-index, we assessed the landscape of existing videos on YouTube. We reviewed 274 videos and coded the 31 that met the inclusion criteria, using a codebook with 50 variables.

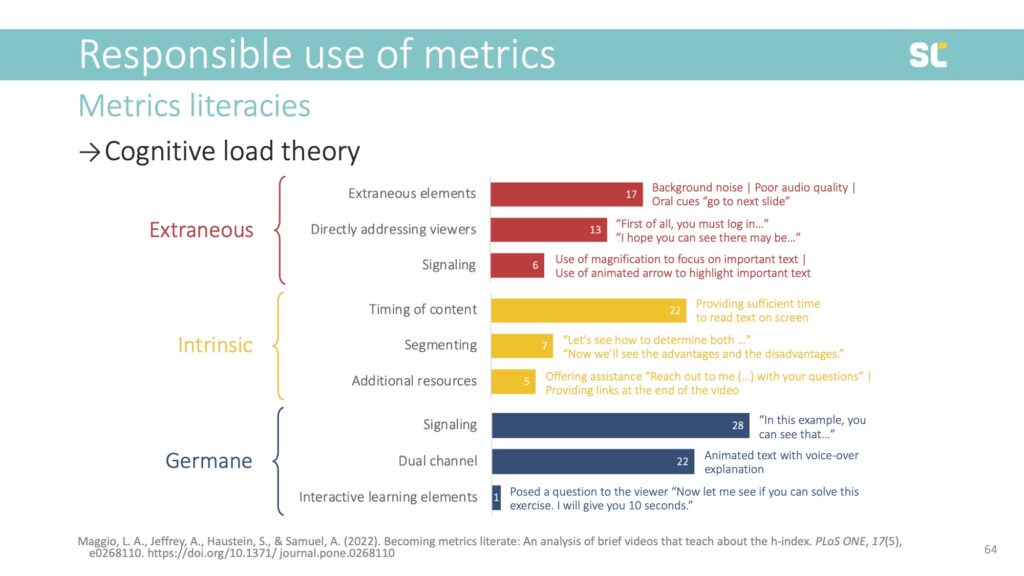

We used Cognitive Load Theory to assess the videos:

- Extraneous cognitive load requires cognitive processing that is not related to learning and should be reduced. This includes non-productive distractions (e.g., background noise).

- Intrinsic cognitive load is associated with inherently difficult tasks or topics. This burden can be reduced by breaking down content (e.g., segmenting).

- Germane cognitive load refers to the resources required to facilitate learning, which can be enhanced by presenting content multi-modally (e.g., dual channel).

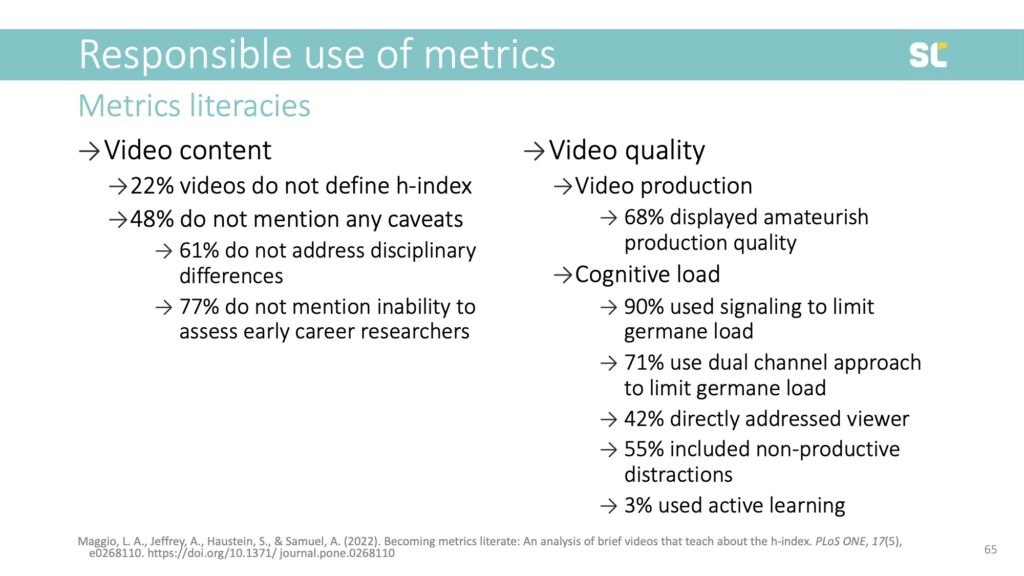

We found that among existing videos about the h-index, 22% do not define the metric and almost half do not address any of its caveats. For example, 61% do not address disciplinary differences and 77% do not mention its inability to assess early career researchers. This is highly problematic because it further promotes the inappropriate use of this flawed indicator.
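For readers who have not seen the definition spelled out: the h-index is the largest number h such that a researcher has h papers with at least h citations each. The sketch below is a minimal illustration of that definition, with made-up citation counts for two hypothetical researchers. It shows one reason the indicator cannot assess early career researchers fairly: the h-index can never exceed the number of papers published, no matter how highly cited those papers are.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


# Hypothetical citation counts per paper (illustrative numbers only).
early_career = [120, 85, 40]  # few papers, all highly cited
senior = [30, 25, 22, 20, 18, 15, 12, 10, 9, 8, 5, 3]  # many papers

print(h_index(early_career))  # 3: capped by the number of papers
print(h_index(senior))        # 9
```

In this example the early career researcher has more citations in total (245 versus 177), yet a much lower h-index.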

From the perspective of production quality, we found that most videos seemed amateurish and more than half contained non-productive distractions. Only one of the 31 coded videos used active learning elements.

We are currently preparing the production of three videos about the h-index, using different educational video formats (e.g., talking head and animations). These will be tested in a randomized controlled trial against a control: a regular academic text, which represents the traditional modality of scholarly communication but is believed to be less efficient and less engaging as an educational resource.

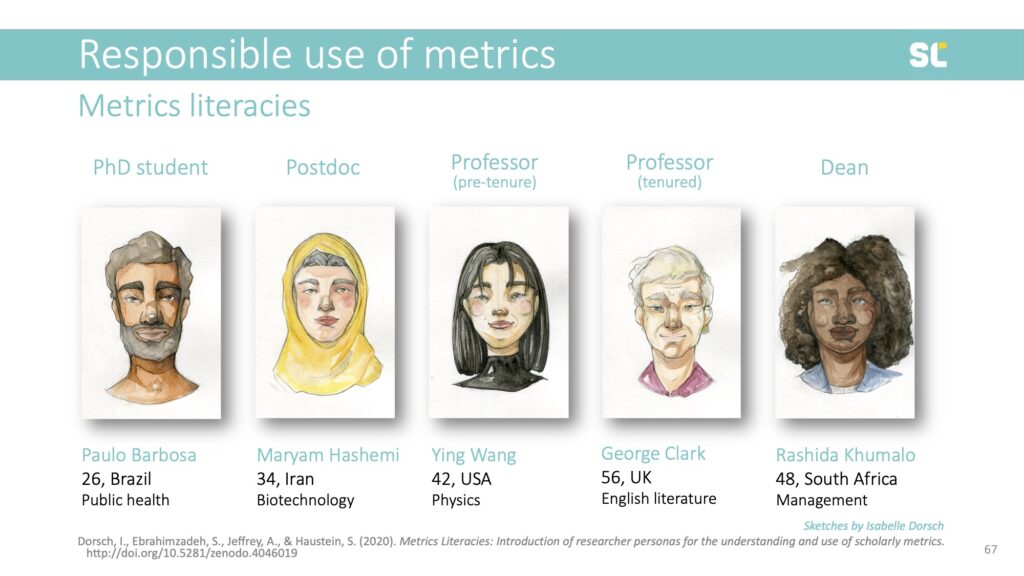

We developed personas and are employing storytelling elements to improve retention and make the videos more engaging. The personas come from different cultural and disciplinary backgrounds, and are at various career stages and roles to allow viewers to identify with them.

We believe that experts in scientometrics, online education, and video production need to work together to fill the current gap in metrics education and promote the responsible use of metrics in academia.

Conclusions

To wrap up this talk, I would like to summarize what I think are the most important opportunities and challenges of scientometrics today.

Opportunities

1. New citation databases. Current developments of new citation databases, particularly the community-led open ones, are exciting as they can potentially make research evaluation more inclusive and diverse, in terms of the researchers and types of research outputs considered.

2. Full-text indexing. With more and more publications available open access and in machine-readable formats, there is a huge opportunity to further develop scientometric indicators that take citation context into account, or that complement the formal citations in reference lists with mentions in the full text (as I have shown for capturing research data reuse).

3. Evidence base for changing policy. There is a huge opportunity for scientometric methods and experts to be involved in creating an evidence base to monitor science policy changes, particularly in the context of open science, open access, and research assessment reform, where policies explicitly emphasize the important role of meta research.

Challenges

1. Incomplete or incorrect metadata. Incomplete or incorrect metadata may lead to the same or similar bias and lack of visibility for research from particular disciplines, countries, or publishers. I think it is up to us as the scientometric community to contribute and invest in improving this open infrastructure.

2. Data-driven indicator development. There is still a challenge of scientometric indicator development being almost exclusively data-driven, measuring what is easy to measure instead of what is desirable.

3. Misuse of metrics. Simplistic and often flawed indicators—such as the h-index, JIF, or even absolute citation counts—are all easier to obtain for the average user than more sophisticated normalized metrics and benchmarks.

4. Dominance of for-profit companies that continue to control infrastructure. Finally, I want to emphasize that despite the diversification of scientometric data providers and the growth of open infrastructure, the market of academic publishing and research evaluation is still dominated by a few for-profit companies that control academic prestige and impact.

Thank you!

Check out the Swiss Year of Scientometrics blog for recordings of Stefanie’s lecture and workshop. Visit Zenodo for all Metrics Literacies research outputs.