Three questions with... Natascha!

Our lab is growing! In our Three Questions series, we’re profiling each of our members and the amazing work they’re doing.

Today’s post features Natascha Chtena, a postdoctoral fellow and research coordinator for the Value of Openness, Inclusion, Communication, and Engagement for Science in a Post-Pandemic World (VOICES) project at the ScholCommLab. In this post, she reflects on cultivating openness and equity in higher education, tackling conventional scholarly publishing, and more.

Q#1 What are you working on at the lab?

I am working with Juan Pablo Alperin and a team of international researchers on a Transatlantic Platform (T-AP) funded project that examines equity, inclusion and engagement in open science in light of the COVID-19 pandemic. What’s innovative about our project is that it considers open research and public science communication as interconnected along a continuum of access, instead of distinct areas with separate concerns, goals, and target audiences.

For a long time, the open movement in higher-ed (OA, open data, etc.) has placed too much emphasis on published outputs and not enough on how these outputs are communicated to and engaged with by the public. That’s not surprising given how deeply ingrained the injunction to “publish or perish” is within the academic system, but I think the COVID-19 pandemic has provided a fertile opportunity for researchers to think about social responsibility and their role in promoting effective public engagement with science.

For those of us actively engaged with open access/source/etc. projects, it’s also given us an impetus to rethink what we really want openness to achieve and for whom. I’m a firm believer in the need to make scientific research not just open access, but also as accessible, inclusive and equitable as possible, and I’m incredibly lucky to be working with a team of like-minded colleagues, with whom I share both interests and an ethos.

Q#2 Tell us about a recent paper, presentation, or project you’re proud of.

I spent the last two years building up the HKS Misinformation Review, a diamond OA journal that publishes timely research on misinformation and on measures aimed at combating it. Our main goal with this project was to create a forum, the first of its kind, where researchers and practitioners from different fields would come together, exchange ideas, and effectively respond to the misinformation crisis.

We also wanted to tackle two long-standing issues of conventional scholarly publishing: (1) unnecessarily long review and publication times (which render much research outdated by the time of publication), and (2) barriers to access for non-scholarly audiences that extend beyond paywalls and poor findability. That’s why we developed a “fast-review” system, with a goal of moving from submission to publication within 1-2 months, along with an innovative, short-form article format that features the most important insights and implications upfront, so they are clearly visible to non-specialists.

I worked day and night to see the project through, and I’m so proud of what the journal has become and what we have accomplished over a very short period of time.

A highlight was when the Misinformation Review was featured on Last Week Tonight with John Oliver!

Q#3 What’s the best (or worst) piece of advice you’ve ever received?

I think advice is overrated, honestly, and so much of it is projection. Plus, nothing can replace the insights of lived experiences. Having said that, when I was training in rescue diving many moons ago, my instructor said: “Whatever happens, don’t panic.” That’s solid advice in any circumstance :)

Read more about Natascha on her website or find her on Twitter at @nataschachtena.

Becoming Metrics Literate: New Study Examines Videos on the H-index

Scholarly metrics are widely applied to assess research quality and impact despite their known limitations. One of the most popular scholarly metrics is the h-index—which is defined as the “h number of papers with at least h number of citations.” This means that if a researcher has an h-index of 12, they have published 12 papers with at least 12 citations each.
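The definition above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure of any particular citation database: the function name `h_index` and the citation counts are hypothetical, chosen only to show how the calculation works.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            # The top `rank` papers all have at least `rank` citations.
            h = rank
        else:
            # Every later paper has even fewer citations, so stop here.
            break
    return h


# Hypothetical citation counts for one researcher's papers (illustrative only).
example_citations = [25, 8, 5, 3, 3, 1, 0]
print(h_index(example_citations))  # prints 3
```

In this made-up example, the most cited paper could have 25 citations or 2,500 and the result would still be 3, which hints at why a single number says little about a researcher's body of work.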

Although simple, the h-index is flawed and often applied inappropriately. It's been criticized due to inconsistencies, lack of field normalization, database-dependence, and time-dependence. Yet the h-index continues to be used for hiring, review, and tenure.

Lauren Maggio, professor of medicine at the Uniformed Services University of Health Sciences, has witnessed how academics misuse metrics in high-stakes decisions. Across her career, sitting on many hiring committees, she has “unfortunately seen candidates compared in relation to their h-index,” despite its shortcomings.

“It’s really important that people understand how these metrics are created, that they understand some of the biases and issues that are baked into these metrics that may disadvantage some groups,” she says. In this post, Lauren tells us about the ScholCommLab’s recent work characterizing available educational videos on the h-index.

Improving Metrics Literacies through educational videos

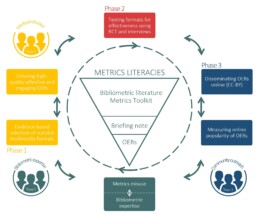

The project, led by Stefanie Haustein, co-director of the ScholCommLab, aims to improve the appropriate use of scholarly metrics in academia. The team is developing educational videos to teach academics “Metrics Literacies,” the skills and knowledge to recognize, interpret, critically assess, and effectively and ethically use scholarly metrics, and will evaluate the videos’ effectiveness in a randomized controlled trial.

The multidisciplinary team consists of bibliometric experts, video producers, and online education specialists, including Lauren. “Stefanie asked me to jump on this because of some of the work I’ve been doing in the medical education space,” she says. They decided to start by developing open educational resources about the infamous h-index.

Before making their own content, the team assessed the landscape of existing educational videos on the h-index. By understanding available resources, Lauren believes that they could “get a sense of how people were actually talking about the h-index, what it is, issues with it” to identify content for their own future initiatives. “We could find good things to do for our own videos or bad things that we knew we wanted to avoid,” she added.

In their first study, recently published in PLOS ONE, the Metrics Literacies team examined the characteristics of available educational videos on the h-index. They identified more than 247 videos to get a sense of how the h-index is described and presented. “We watched a ton of YouTube,” laughed Lauren.

Only 31 videos met the inclusion criteria (in English, less than 10 minutes long, and actually about the h-index). At least two of the authors independently coded each of the videos based on their content, presentation style, and educational quality.

Specifically, the team used Cognitive Load Theory—an instructional theory to guide educational design—to evaluate whether the video format actually helped people learn. Based on this theory, ideal educational videos should be designed to balance three types of cognitive load:

- Reduce extraneous load—unnecessary design features that prevent new information from being processed (e.g., background noises)

- Minimize intrinsic load—the inherent difficulty of new information. Complex tasks cannot be made easier, but the learning burden can be reduced by breaking down content (e.g., segmenting)

- Increase germane load—resources required to facilitate learning. Presenting content in different ways enhances learning (e.g. using audio to explain something, while also showing it with video)

Their results suggest that when it comes to building literacies about the h-index, the scholarly community has a long way to go.

Most h-index videos use elements to balance cognitive load

Most of the videos used elements to balance cognitive load. 90% of videos used signaling (or cues highlighting important details) and 71% used dual channel approaches (like animated text with voice-over explanations) to enhance cognitive processing and increase germane load. 42% of videos actually addressed the viewer, reducing extraneous load.

Interestingly, only one video included an interactive learning element. 97% of videos did not pause to ask a question, pose a reflection, or attempt a calculation—which are tools that can facilitate the learning process. The lack of interaction in the videos could be due to the nature of the medium, but Lauren believes “It is possible to do those things. They just weren’t taking advantage of that.”

The research also made it clear that there is lots of room for improvement when it comes to minimizing distractions unrelated to learning. 55% of videos included non-productive distractions, which increase extraneous load. Lauren found the distractions to be extreme in several of the videos. “You could hear birds, pretty loud, that would just really distract you, or they would be doing a screencast and just be moving their mouse everywhere,” she recalls. She recommends that future initiatives be more mindful of reducing these extraneous elements.

Current videos hardly mention inconsistencies or usefulness of the h-index

While most videos defined or calculated the h-index, 48% did not mention any caveats. Most made no mention of disadvantages to early career researchers (77%), database differences (71%), or disciplinary differences (61%). In fact, Lauren saw that “There were presenters very much proposing and helping viewers to think about how to boost their h-index, which shouldn’t really have been a surprise.” Without critical reflection, these videos fail to be transparent about the shortcomings of the h-index.

Available videos on h-index appear amateurish

68% of the videos displayed amateurish production quality. These videos had poor camera angles, audio quality, and editing, which added distractions and disrupted the learning experience. In contrast, the professionally produced videos the team identified flowed smoothly, incorporating images and animations with scripted dialogue. For the team, this finding highlights the importance of involving online education specialists and video producers in developing future videos.

A bright future for the Metrics Literacies project

Thanks to funding from SSHRC, Lauren is excited for what’s next: creating and testing three educational videos on the h-index. “This was really the background work. Now we’re starting to think about how we actually create the video and how we are going to measure what’s happening.”

But the road toward making people metrics literate doesn’t stop here. All of the Metrics Literacies’ resources are open through Zenodo. Lauren envisions that anyone could adopt their approaches and materials, using them to improve literacies about other scholarly metrics. In her words, “If we care about science itself, and we want science to be sound and ethical, then we really should be very mindful of what we're doing with our metrics.”

To stay up to date with the Metrics Literacies project, sign up for the ScholCommLab newsletter. Visit Zenodo to access all of the Metrics Literacies outputs.

Rethinking Research Assessment for the Greater Good: Findings from the RPT Project

The review, promotion, and tenure (RPT) process is central to academic life and workplace advancement. It influences where faculty direct their attention, research, and publications. By unveiling the RPT process, we can inform actions that lead towards a greater opening of research.

Between 2017 and 2022, we conducted a multi-year research project involving the collection and analysis of more than 850 RPT guidelines and 338 surveys with scholars from 129 research institutions across Canada and the US. Starting with a literature review of academic promotion and tenure processes, we launched six studies applying mixed methods approaches such as surveys and matrix coding.

So how do today's universities and colleges incentivize open access research? Read on for 6 key takeaways from our studies.

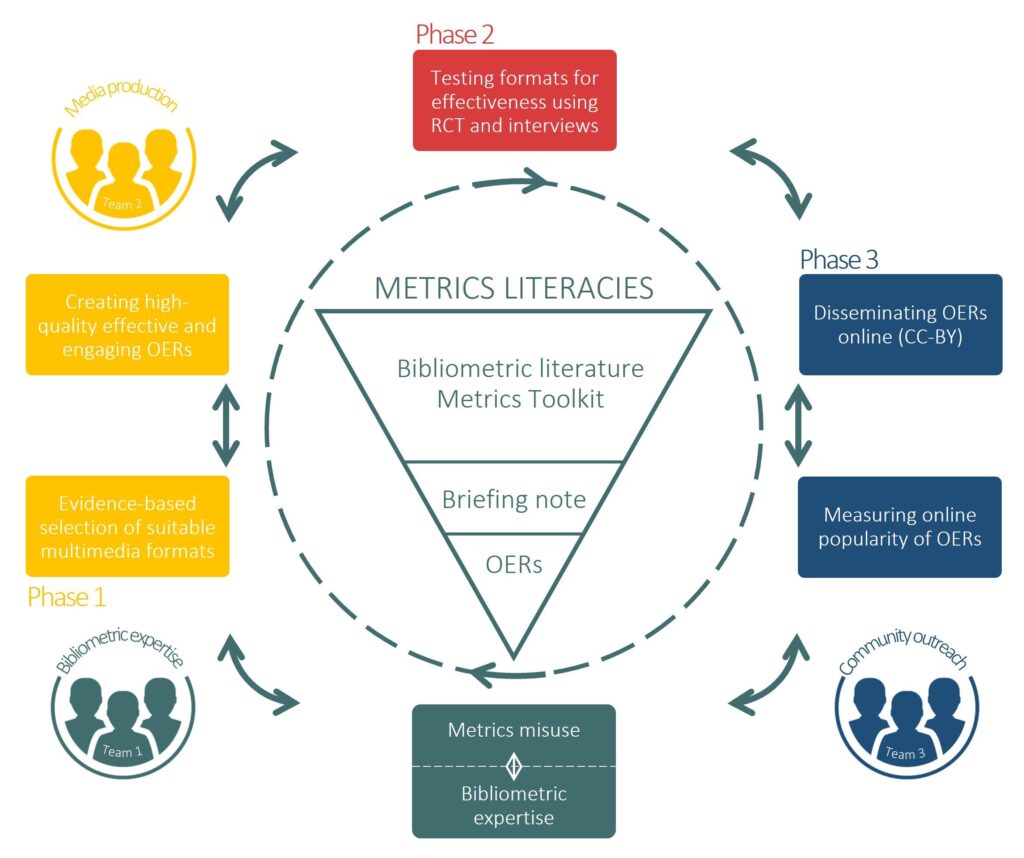

1. Faculty assessments do not align with institutional goals

In a study published in eLife in 2019, we assessed the degree to which RPT documents included guidelines specific to open access, open data, and open education.

We found that while institutions want faculty to engage in public outreach, there are no explicit incentives or structures to assess contributions to public scholarship. Faculty were rewarded for traditional research outputs and citations. “Public” and “Community” are terms often mentioned in RPT documents, yet outputs of public scholarship were viewed as service contributions—not research. Only 5% of institutions mentioned “Open Access” in their RPT documents.

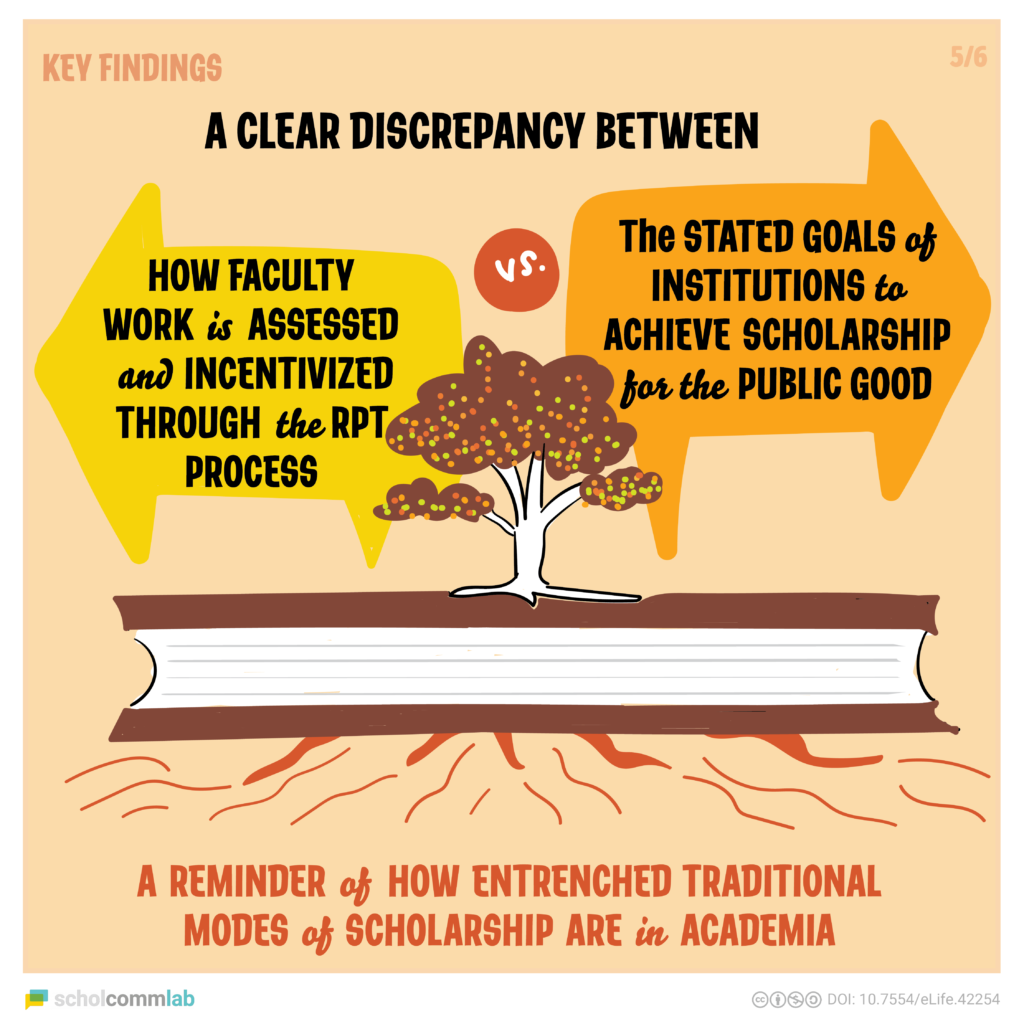

2. Nontraditional outputs are undervalued in the RPT process

So if there were no incentives for public scholarship, what kinds of outputs were mentioned in the RPT guidelines? In this book chapter for the Open Handbook of Linguistic Data Management (2020), we explored how non-traditional outputs are assessed in promotion and tenure.

Around 95% of institutions mentioned traditional outputs such as journal articles and book chapters. A diversity of research outputs, such as open data sets, software, or works in progress, were found in RPT documents extending across institution types and disciplines. While research activities are beginning to be recognized more broadly, our findings suggest that current structures of faculty assessment have yet to recognize the value of non-traditional scholarly outputs.

3. Definitions of "quality," "impact," and "prestige" are vague and circular

In this study (published in PLOS in 2021), we wanted to know how faculty define common research terms used in RPT documents.

We found that faculty often defined "quality," "impact," and "prestige" in circular and overlapping ways. Researchers and their colleagues applied their own, often different, understanding of these terms. Interestingly, the varying definitions did not relate to age, gender, or academic discipline. Our findings suggest that we can’t rely on these ill-defined and highly subjective terms for evaluation.

4. Impact factor persists in academic evaluations, despite its limitations

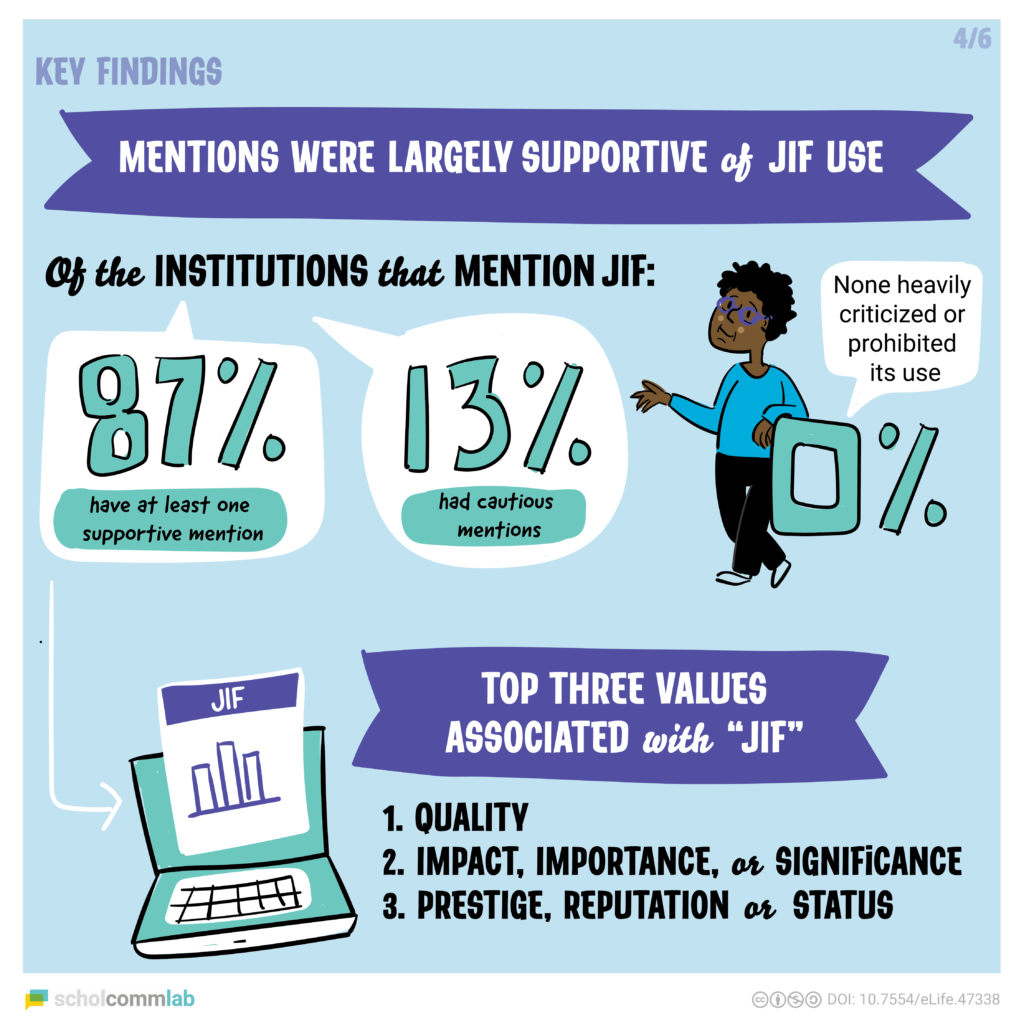

In a study published in eLife in 2019, we explored the use of the Journal Impact Factor (JIF) in RPT documents. We analyzed how faculty defined and mentioned this controversial metric.

We found that only 23% of institutions mentioned “impact factor” explicitly in the RPT documents. While this may seem low, there were over a dozen terms used that faculty may associate with JIF without stating it explicitly, including: “prestigious journal,” “leading journal,” and “top journal.” Despite little to no evidence that JIF is a valid measure of research quality, there is still pressure to publish in high-JIF venues, which limits the adoption of open access publishing.

5. Faculty prioritize readership but think their peers value prestige

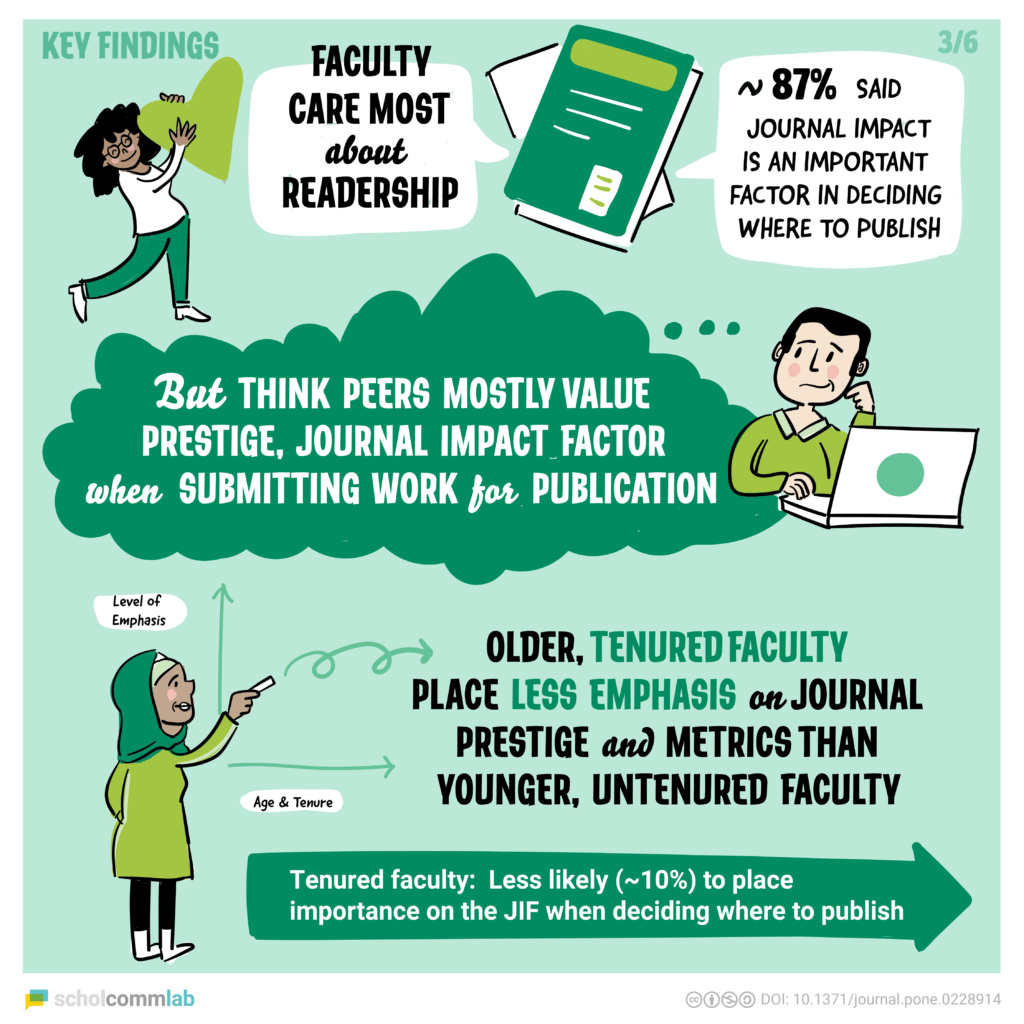

In another study (published in PLOS in 2020), we examined what drives faculty to publish where they publish. The results were surprising.

While faculty prioritize readership when submitting their work for publication, they believe their peers are driven by journal prestige and metrics (such as impact factor). Next to readership, faculty cared about journal prestige and journals which their peers read. As for the RPT process, faculty thought they would be evaluated based on publication quantity and prestige—but this perception varied across age, career stage, and institution type.

6. Collegiality: a double-edged sword in tenure decisions

In our sixth—and final—publication (published this year in PLOS), we explored whether and how “collegiality” is used in the RPT process.

While “collegiality” was hardly ever defined in RPT documents, faculty we surveyed said that it plays an important role in the tenure process. Faculty from research-intensive universities were more likely to perceive collegiality as a factor in their RPT processes. Our results suggest that clear policy on “collegiality” is needed to avoid punishing those who don’t "fit in."

Reimagining the future of review, promotion, and tenure

Since starting this project more than 5 years ago, our papers, blog posts, and tweets have been viewed and shared thousands of times. They’ve been covered in more than 15 news stories and blog posts, including in Nature, Science, and Times Higher Education. We’re hopeful that this wide visibility is a first step towards reimagining the RPT process so that it better reflects the types of scholarship that faculty members themselves want to see in the world.

So where do we go from here?

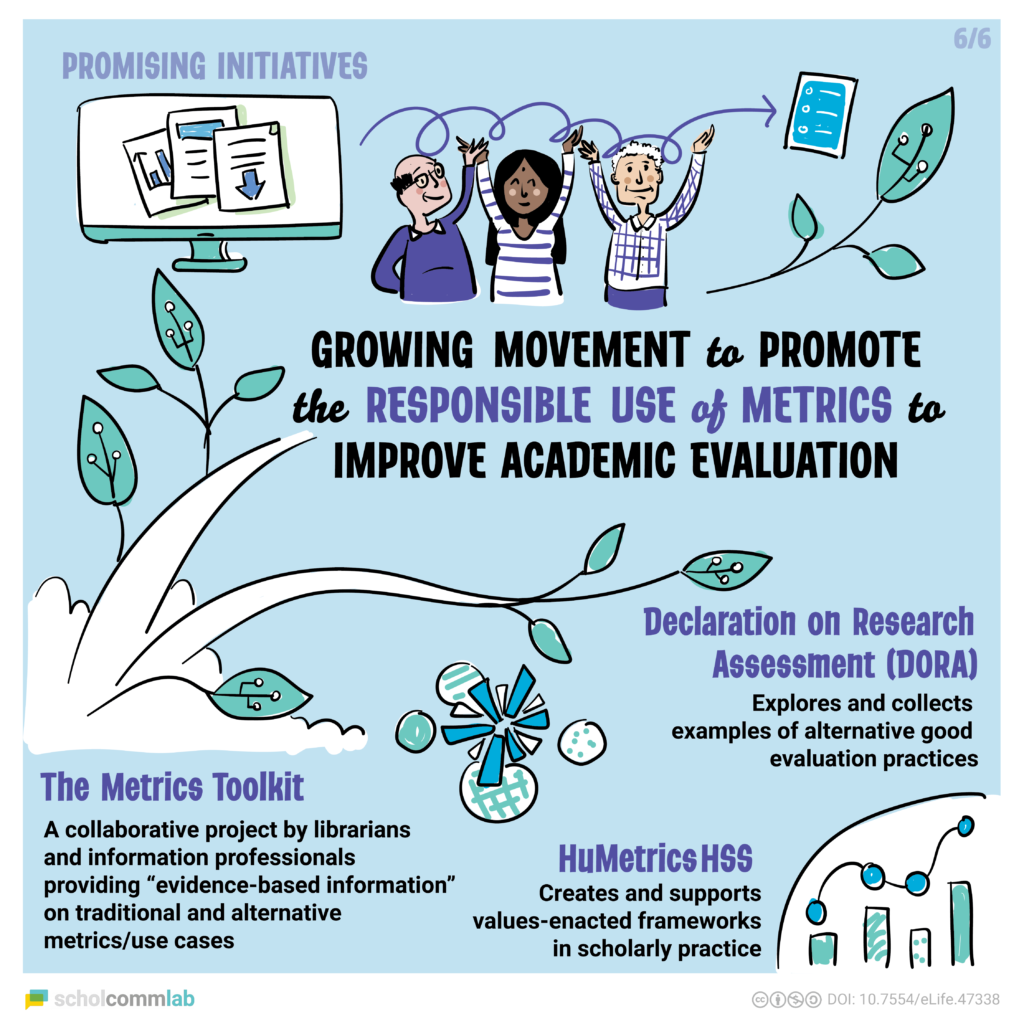

In all of our papers, the overarching conclusion is the same: There's room for values-based assessments in the RPT process. We need to move away from measuring outputs or judging faculty by ill-defined concepts and move towards rewarding the values, processes, and ways in which people work. As we bring the RPT project to a close, we’d like to highlight two initiatives that have started to pave the way:

1. The Humane Metrics in the Humanities and Social Sciences (HuMetricsHSS) initiative generates and supports values-based approaches for scholarly life including review, promotion, and tenure. Their paper, Walking the Talk: Toward a Values-Aligned Academy, offers a set of recommendations for making wide-scale change to improve and strengthen the impact of all scholarly work. It features work by our lab, challenges academics to reform and rethink the RPT process, and suggests ways to align faculty expectations with institutional values. We invite you to read HuMetricsHSS’ work and reflect on the values that you would like to see considered in your institution’s RPT process.

2. The Declaration on Research Assessment (DORA) is a worldwide initiative recognizing the need to improve the ways in which researchers and the outputs of scholarly research are evaluated. They offer recommendations, resources, and case studies on best practices for research assessment. We encourage you and your institution to read, sign, and adopt DORA, to improve the evaluation of scholarly output.

Finally, we hope this work inspires you to reflect on your own role in upholding the status quo in the RPT process, and on the power you have to change it. As Juan Pablo Alperin says, “I invite you to begin a conversation by sharing with your colleague why access to research matters. Because it does.” By sharing our values and thoughts on the RPT process, we can cultivate an academic culture that rethinks research assessment for the greater good.

We would like to thank and acknowledge the team behind the RPT project, which includes Erin McKiernan, Meredith Niles, Lesley Schimanski, Carol Muñoz Nieves, Lisa Matthias, Michelle La, Esteban Morales, and Diane (DeDe) Dawson. Without your contributions, this work would not have been possible!

For more about the review, promotion, and tenure project, check out the project website, visual overview, blog posts, and media coverage.

Three questions with… Fatou!

Our lab is growing! In our Three Questions series, we’re profiling each of our members and the amazing work they’re doing.

This week's post features Fatou Bah, a master's student in the School of Information Studies (ÉSIS) at the University of Ottawa (UOttawa), Data Support Specialist in Research Data Management at the UOttawa Library, and research assistant at the ScholCommLab. She tells us about her work in cognitive accessibility, taking her own advice, and more.

Q#1 What are you working on at the lab?

I just joined the lab and will be working on my master’s thesis with Stefanie Haustein. My research background is in cognitive accessibility—helping people of all cognitive abilities process information. I believe everyone should have access to information regardless of their disability status. My research focuses on how database accessibility impacts the search behaviour of academics with cognitive disabilities. This is especially important for individuals working and studying in higher education, where searching through databases is critical to completing their work.

Q#2 Tell us about a recent paper, presentation, or project you’re proud of.

I recently had the opportunity to guest lecture, which was exciting. It was for an ethics and social responsibility course. I discussed how technology supports cognitive function and the barriers to adopting this technology (e.g., lack of cognitive accessibility). I also talked about how persons with cognitive disabilities are at higher risk of privacy threats. Caution needs to be exercised when developing technologies for this population.

Q#3 What’s the best (or worst) piece of advice you’ve ever received?

That’s a tough one. I can’t recall any instance where someone has given me advice on something or where I asked for someone’s advice. If someone has, clearly I haven’t taken it.

Find Fatou on Twitter at @Fatou_Bah1.